DL Series: What is Deep Learning?

One of the subfields of machine learning (ML), deep learning (DL) focuses on artificial neural networks, which are inspired by the structure and function of the human brain. Deep learning attempts to mimic the brain by enabling systems to cluster data and make highly accurate predictions. That said, artificial neural networks are still a long way from matching the capabilities of the human brain. One reason for this gap is that deep learning models typically need orders of magnitude more data than humans do to learn a task.

Deep Learning vs. Machine Learning

To fully understand deep learning, it helps to first distinguish it from classical machine learning. There are a few key differences between the two.

Classical machine learning often requires more human intervention to produce results, such as labeling and classifying input data. Deep learning systems, on the other hand, can work with much larger datasets and feature sets, even when the data is unlabeled. They also require far more powerful hardware and resources, and they typically take longer to train. Finally, deep learning requires more expertise for tasks such as selecting a neural network architecture and optimizing hyperparameters.

Although it is not always the case, classical machine learning traditionally works with structured data (such as tables with well-defined columns), while deep learning can handle much larger volumes of unstructured data (such as images, audio, and free text).

Structured vs. unstructured data (Image: AI Wiki)

Deep learning algorithms can also learn on their own which features distinguish one piece of data from another, whereas classical machine learning relies on human experts to identify those features. During training, deep learning models then repeatedly adjust themselves to make more accurate predictions.

Mimicking the Human Brain

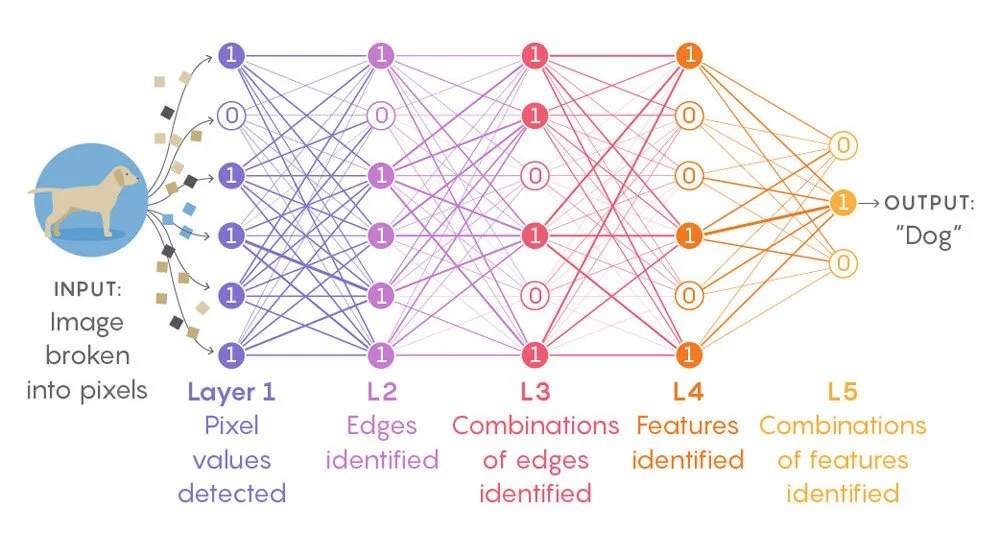

Now that we have described the major differences between deep learning and classical machine learning, let's look at how deep learning works. Deep neural networks attempt to mimic the human brain through a combination of data inputs, weights, and biases. Together, these elements allow the network to recognize, classify, and describe objects within unstructured data.
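To make inputs, weights, and biases concrete, here is a minimal sketch of a single artificial neuron (node) in plain Python. It assumes a sigmoid activation function, which is only one of several common choices (ReLU and tanh are others); the function names and sample values are illustrative, not taken from any particular library. The node multiplies each input by its weight, adds the bias, and passes the result through the activation.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs, plus the bias, then activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Illustrative values only: two inputs, two weights, one bias.
print(neuron([1.0, 2.0], [0.5, -0.25], 0.1))  # z = 0.5 - 0.5 + 0.1 = 0.1, output roughly 0.525
```

A deep neural network is, at its core, many of these nodes wired together, with the weights and biases learned from data rather than chosen by hand.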

Deep neural networks consist of multiple layers of interconnected nodes. Each layer builds on the output of the previous one, progressively refining the network's representation of the data and improving its predictions. This process of passing computations forward through the network, layer by layer, is called forward propagation.
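Forward propagation can be sketched in plain Python as below, assuming fully connected layers, a ReLU activation in the hidden layer, and no activation on the output node. The 2-3-1 layer sizes and every weight and bias are hypothetical, picked by hand so the arithmetic is easy to follow; in a real network they would be learned during training.

```python
def relu(z: float) -> float:
    """Rectified linear unit: pass positive values through, zero out negatives."""
    return max(0.0, z)

def identity(z: float) -> float:
    """No activation (used on the output node in this sketch)."""
    return z

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: every node receives every input.

    weights[j][i] is the weight from input i to node j; biases[j] is node j's bias.
    """
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Forward propagation: each layer's output becomes the next layer's input."""
    activations = x
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations

# Hypothetical 2-3-1 network with hand-picked parameters (normally these are learned).
network = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, 0.0], relu),  # hidden layer, 3 nodes
    ([[1.0, 1.0, 1.0]], [0.0], identity),                             # output layer, 1 node
]
print(forward([2.0, 1.0], network))  # hidden = [1.0, 1.5, 0.0], output = [2.5]
```

Changing the list of layers (how many there are, how many nodes each has, or which activation each uses) is the simplest way to vary the architecture in this sketch.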

Deep neural networks also have input and output layers, with the hidden layers in between. The input layer is where the model ingests the data for processing, and the output layer is where the final prediction or classification is made. Beyond this basic structure, there are many different neural network architectures, which vary in the number of layers, the types of cells, the connections between layers, and more.
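For classification, one common (though not the only) way to turn the output layer's raw scores into a prediction is the softmax function, which converts the scores into probabilities that sum to 1; the predicted class is the one with the highest probability. A minimal sketch, with hypothetical class labels and scores:

```python
import math

def softmax(scores: list[float]) -> list[float]:
    """Convert raw output-layer scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(scores: list[float], labels: list[str]) -> tuple[str, float]:
    """Return the label with the highest probability, along with that probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

labels = ["cat", "dog", "bird"]  # hypothetical classes
scores = [2.0, 1.0, 0.1]         # hypothetical raw scores from the output layer
print(predict(scores, labels))   # "cat" wins, with a probability of roughly 0.66
```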

You can review the many types of neural network architectures here.

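So how do deep neural networks measure and correct their errors? This is done through another process called backpropagation. After a forward pass, a loss function measures the error between the network's prediction and the correct answer. Backpropagation then moves backward through the layers, calculating how much each weight and bias [1] contributed to that error (its gradient). An optimization algorithm such as gradient descent uses these gradients to adjust the weights and biases, training the model to become more accurate.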
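Backpropagation is easiest to see on a very small network. The sketch below assumes a hypothetical network with one input, one hidden node, and one output node, sigmoid activations, and a squared-error loss; the parameter values are arbitrary. It computes every gradient with the chain rule, starting at the output layer and moving back to the hidden layer, then checks each result against a finite-difference estimate.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grads(x: float, y: float, p: dict) -> tuple[float, dict]:
    """Tiny 1-1-1 network: input x -> hidden node h -> output node a.

    Loss is squared error L = (a - y)^2 / 2. Gradients are computed by
    backpropagation: apply the chain rule starting at the loss and move
    from the output layer back toward the input.
    """
    # Forward propagation
    h = sigmoid(p["w1"] * x + p["b1"])
    a = sigmoid(p["w2"] * h + p["b2"])
    loss = 0.5 * (a - y) ** 2

    # Backpropagation through the output layer
    dz2 = (a - y) * a * (1.0 - a)  # dL/dz2, using sigmoid'(z) = a * (1 - a)
    grads = {"w2": dz2 * h, "b2": dz2}
    # ...then through the hidden layer
    dh = dz2 * p["w2"]             # error signal sent back to the hidden node
    dz1 = dh * h * (1.0 - h)
    grads["w1"] = dz1 * x
    grads["b1"] = dz1
    return loss, grads

def numerical_grad(x: float, y: float, p: dict, name: str, eps: float = 1e-6) -> float:
    """Central finite-difference estimate of dL/d(name), used only to check backprop."""
    plus, minus = dict(p), dict(p)
    plus[name] += eps
    minus[name] -= eps
    return (loss_and_grads(x, y, plus)[0] - loss_and_grads(x, y, minus)[0]) / (2 * eps)

params = {"w1": 0.4, "b1": -0.1, "w2": 0.7, "b2": 0.2}  # hypothetical starting values
loss, grads = loss_and_grads(1.5, 1.0, params)
for name in params:
    print(name, grads[name], numerical_grad(1.5, 1.0, params, name))
```

The two numbers printed for each parameter should agree closely. This kind of gradient check is a common way to confirm that a hand-written backward pass is correct.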

The result is a back-and-forth cycle:

Make predictions.

Work backwards to identify and correct errors.

Make new predictions.

Repeat.

With each cycle, the model's predictions typically become more accurate.
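The predict, work backwards, and repeat cycle above is what a training loop does. Below is a minimal sketch that assumes plain gradient descent as the optimizer (one common choice among several), a single linear node with no hidden layers to keep it short, mean squared error as the loss, and hypothetical toy data drawn from y = 2x + 1. The learning rate and epoch count are illustrative values, not recommendations.

```python
def train(data, lr=0.05, epochs=500):
    """Repeat the predict -> measure error -> adjust cycle using gradient descent.

    Model: a single linear node, prediction = w * x + b.
    Loss: mean squared error over the dataset.
    """
    w, b = 0.0, 0.0  # arbitrary starting parameters
    history = []
    n = len(data)
    for _ in range(epochs):
        # 1. Make predictions (forward pass) and measure the error
        preds = [w * x + b for x, _ in data]
        errors = [p - y for p, (_, y) in zip(preds, data)]
        history.append(sum(e * e for e in errors) / n)
        # 2. Work backwards: gradients of the loss with respect to w and b
        grad_w = 2 * sum(e * x for e, (x, _) in zip(errors, data)) / n
        grad_b = 2 * sum(errors) / n
        # 3. Correct the parameters by stepping against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
        # 4. Repeat with the updated parameters
    return w, b, history

# Hypothetical toy data generated from y = 2x + 1
data = [(x, 2 * x + 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]
w, b, history = train(data)
print(f"w={w:.3f}, b={b:.3f}, first loss={history[0]:.3f}, last loss={history[-1]:.6f}")
# w and b end up very close to 2 and 1, and the loss shrinks toward zero
```

In a real deep network, step 2 would be a full backpropagation pass through every layer, but the overall shape of the loop stays the same.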

Function of layers within a neural network (Image: Wandb.ai)

In the upcoming installments of this DL Series, we will cover the specific types of neural networks, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), and go much deeper into all the aspects of deep learning.

Deep Learning Applications

Deep learning can be applied in many ways across different fields.

Let’s take a look at some of the most popular deep learning applications:

Self-driving cars: Deep learning is key to autonomous vehicles. Massive datasets are fed to models, which are trained and then tested in simulated and real-world environments.

News aggregation: Deep learning is used to build a profile of each reader's interests and to filter news based on geographical, social, and economic parameters.

Fraud detection: Deep learning algorithms can detect anomalies, such as unusual transactions, that help prevent fraud.

Computer vision: Deep learning is widely used to build vision-based AI systems, such as image classifiers and object detectors.

NLP/NLG: Natural language processing (NLP) models use deep learning to identify complex patterns in sentences, and natural language generation (NLG) models use it to generate coherent text.

The Importance of Deep Learning

Deep learning is one of the most impressive advances in AI and machine learning. It allows us to process massive amounts of unstructured data and identify patterns that can lead to valuable insights. This makes it particularly well suited to modern data, which often lacks a natural structure and is far too voluminous for humans to process manually.

Deep learning is still young compared with the broader fields of artificial intelligence and machine learning. It has progressed rapidly over the last few years and has enormous potential, driven by advances in cloud computing, the explosion of available data, and possibly, in the future, quantum computing.

Major tech companies are investing heavily in deep learning because it is crucial to creating intelligent machines. It is also affecting nearly every sector in some way, and it will continue to do so as we move further into an AI-driven world.

Now that you know how deep learning differs from classical machine learning, it's time to move on to the specifics of each popular type of deep learning network. Make sure to look out for the upcoming installments of this DL series, which will cover the different types of deep learning networks and their applications, starting with artificial neural networks (ANNs).


----------

[1] Weights determine how strongly a particular input influences a node's output: the larger the magnitude of an input's weight, the more that input affects the network. Biases are additional learnable parameters, independent of the inputs, that are added to a node's weighted sum and help the model fit the data. They allow a node to produce a nonzero output even when all of its inputs are zero.